Configure Multiple Nodepool Implementations

Lucidworks recommends isolating search workloads from analytics workloads using multiple node pools. The included scripts do not do this for you; this is a manual process.

See Workload Isolation with Multiple Node Pools for more information.

-

Run the

customize_fusion_values.shscript with the following arguments:./customize_fusion_values.sh \ -c demo \ (1) -n fusion-lw \ (2) --provider k8s \ --node-pool {} \ (3) --with-resource-limits \ --with-affinity-rules \ --with-replicas1 The name of your cluster. This example uses demo.2 The name of your namespace. 3 By using the empty value {}as the value for the--node-poolargument, the script leaves thenodePoolsvalues unassigned. Assign these values in a later step.The example above outputs the following:

Created Fusion custom values yaml: k8s_ demo_fusion-lw_fusion_values.yaml Created Monitoring custom values yaml: k8s_ demo_fusion-lw_monitoring_values.yaml. Keep this file handy as you'll need it to customize your Monitoring installation. Create k8s_ demo_fusion-lw_upgrade_fusion.sh for upgrading you Fusion cluster. Please keep this script along with your custom values yaml file(s) in version control. Update nodeSelector values for k8s_ demo_fusion-lw_fusion_values.yaml and update the solr to use nodepool -

Update Solr in

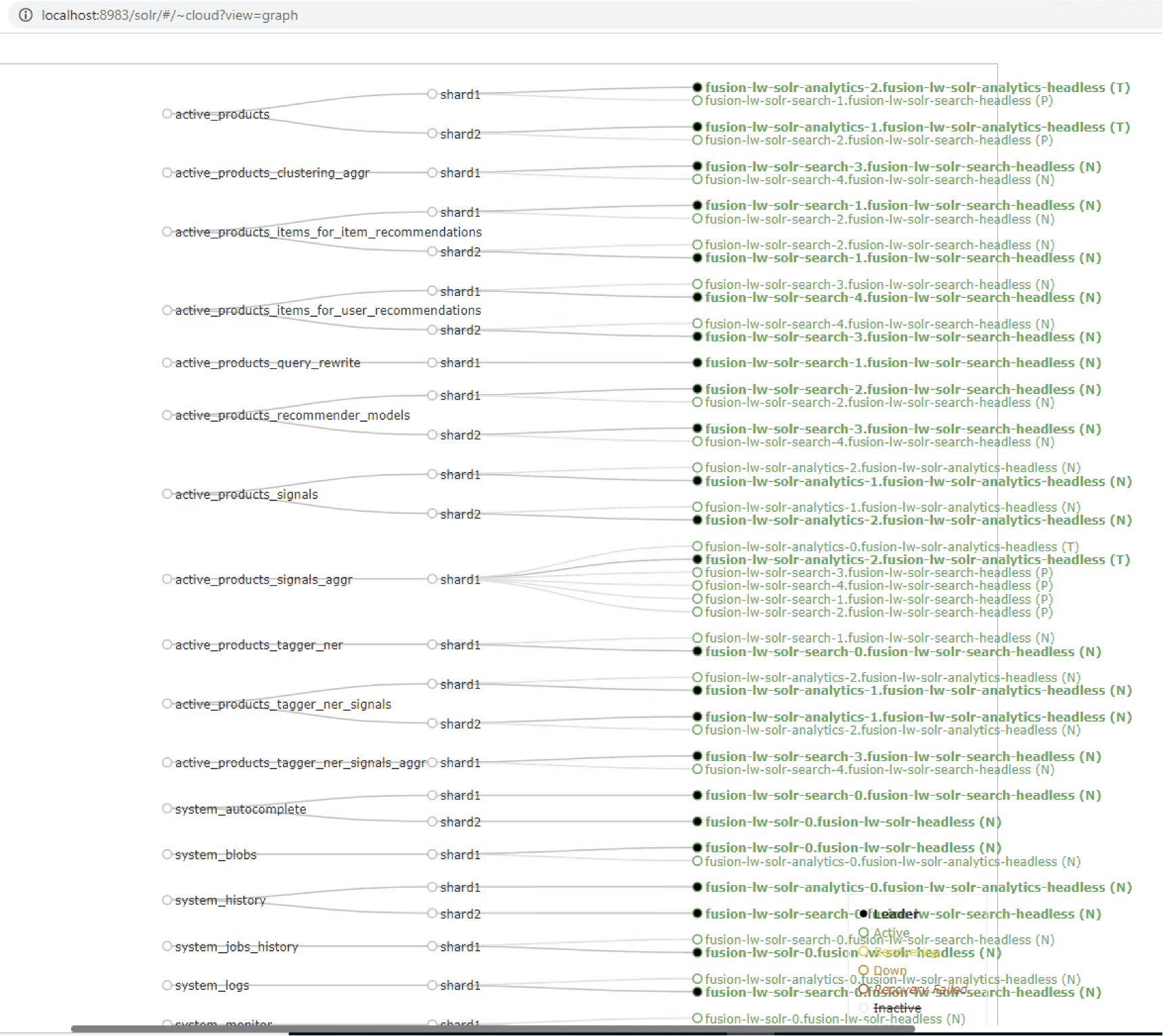

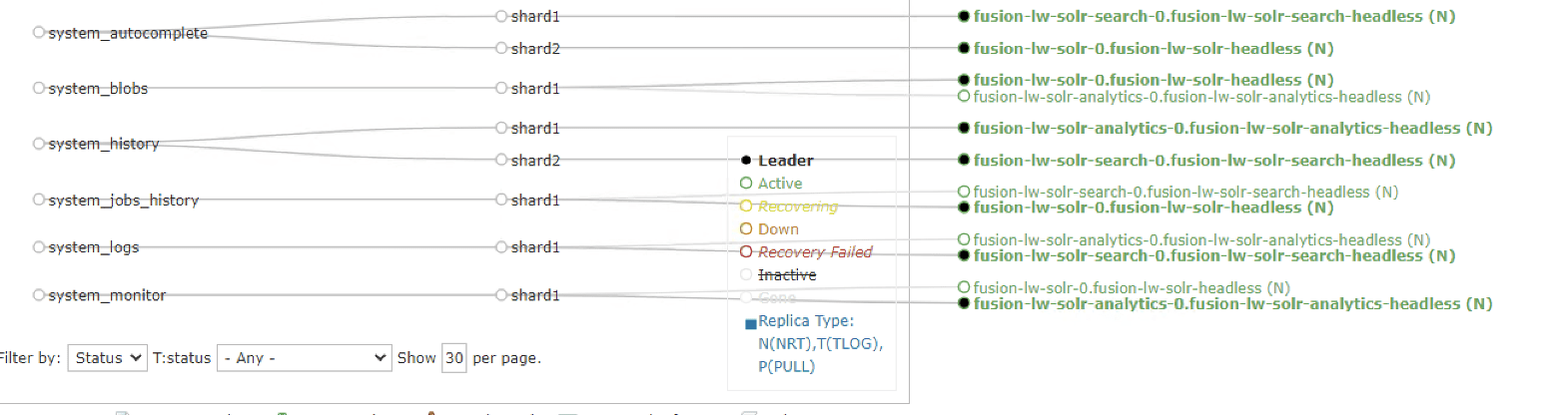

k8s_demo_fusion-lw_fusion_values.yamlto addnodePools. Thefusion_node_typevalue connects the SolrnodePoolsvalues to the corresponding node pool.solr: nodePools: - name: "" (1) - name: "analytics" (2) javaMem: "-Xmx6g" replicaCount: 6 storageSize: "100Gi" nodeSelector: fusion_node_type: analytics (3) resources: requests: cpu: 2 memory: 12Gi limits: cpu: 3 memory: 12Gi - name: "search" (4) javaMem: "-Xms11g -Xmx11g" replicaCount: 12 storageSize: "50Gi" nodeSelector: fusion_node_type: search (5) resources: limits: cpu: "7700m" memory: "26Gi" requests: cpu: "7000m" memory: "25Gi" nodeSelector: cloud.google.com/gke-nodepool: default-pool (6) ...1 The empty string ""is the suffix for the default partition.2 Overrides the settings for the analytics Solr pods. 3 Assigns the analytics Solr pods to the node pool and attaches the label fusion_node_type=analytics. You can use thefusion_node_typeproperty in Solr auto-scaling policies to govern replica placement during collection creation.4 Overrides the settings for the search Solr pods 5 Assigns the search Solr pods to the node pool and attaches the label fusion_node_type=search.6 Sets the default settings for all Solr pods, if not specifically overridden in the nodePoolssection above.Do not edit the nodePoolsvalue"".Only use a single fusion_node_typevalue per SolrnodeSelectorvalue. -

Update

k8s_demo_fusion-lw_fusion_values.yamlto add appropriatenodeSelectorvalues for all desired services. -

Run the

upgrade_fusion.shscript:./k8s_demo_fusion-lw_upgrade_fusion.shThe output resembles the following:

namespace/fusion-lw created Created namespace fusion-lw with owner label Hang tight while we grab the latest from your chart repositories... ... (1) NAME: fusion-lw LAST DEPLOYED: Wed Sep 15 18:25:55 2021 NAMESPACE: fusion-lw STATUS: deployed REVISION: 1 ... NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION fusion-lw fusion-lw 1 2021-09-15 18:25:55.1470127 -0500 CDT deployed fusion-5.4.2 5.4.21 The …indicates some parts of the output are removed to shorten the example response. -

Install Prometheus and Grafana so you can monitor Fusion metrics by running the following:

./install_prom.sh --provider k8s -c demo -n fusion-lwThe output resembles the following:

Adding the stable chart repo to helm repo list "stable" has been added to your repositories Installing Prometheus and Grafana for monitoring Fusion metrics ... this can take a few minutes. ... NAME: fusion-lw-monitoring LAST DEPLOYED: Wed Sep 15 22:59:28 2021 NAMESPACE: fusion-lw STATUS: deployed REVISION: 1 NOTES: The Prometheus server can be accessed via port 80 on the following DNS name from within your cluster: fusion-lw-monitoring-prometheus-server.fusion-lw.svc.cluster.local ... Successfully installed Prometheus and Grafana into the fusion-lw namespace. NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION fusion-lw fusion-lw 1 2021-09-15 18:25:55.1470127 -0500 CDT deployed fusion-5.4.2 5.4.2 fusion-lw-monitoring fusion-lw 1 2021-09-15 22:59:28.9054416 -0500 CDT deployed fusion-monitoring-1.0.0 1.0.0 -

Update the Solr collections to use specific nodes. Apply the Solr policy and run

update_app_coll_layout.sh. This script deletes the collections and recreates them with the new policy. Check the scripts for details on collection names.